So I’ve been catching up on my paper reviewing duties this weekend. To those outside the Ivory Outhouse, “reviewing a paper” means “anonymously criticizing a research article considered for publication in a scientific journal”. (For those of you familiar with the process, you can jump to the text after the first figure.) Here’s how science gets published: a group of scientists does research. They write up their findings as an article (typically called a “paper”), and submit it to a journal. An editor, who hopefully knows his/her business, considers whether the paper is a good fit for this journal. Is the topic of the paper typical of what this journal publishes? Are the findings interesting and exciting enough? (The words novel and impactful get tossed around a lot at this stage.) Is it well-written? Does it conform to journal standards of article length and format? Different journals have different thresholds for these criteria, with editorial rejection rates anywhere between 5 and 80% of submitted papers.

I finished reading Wool / Omnibus a few days ago. If you want one captivating book (or rather pentalogy) to read by the end of the year, then this is it. The five books grew from a short story, Wool, published electronically. The author, Hugh Howey, continued to publish Wool 2-5, and then compiled all five books into one volume, which he published independently. With hardly any PR from his side, the Wool series became incredibly popular, and it is among the top Kindle bestsellers this year. In fact, now that Simon & Schuster have bought the dead-tree publication rights in the US, Amazon.com has stopped selling the independently published hardback and paperback editions, making my copy something of a collector’s item. You can still get the Kindle edition though.

What is Wool / Omnibus about? I would like to say that what follows is a spoiler-free review, but as with any review, you will still get some idea of the book’s plot from me before the author unfolds the story. I read the book without even looking at the back cover blurb, and I believe that approaching this book in complete ignorance, as a tabula rasa, will give you the best experience. One of the great joys in reading the Wool books was the way Howey reveals his world to us. A large part of Wool is about the choices we make in what we want, and do not want, to know about the world we live in. And in this respect, Howey blends story content and storytelling form into the best read I have had in… well… longer than I can remember.

So I will leave you with three Wool-world-like choices: 1. you can stop here (and get the book); 2. you can watch the claymation video of Plato’s Cave allegory below. This will give you an idea of one of the prevalent themes of Wool, without actually giving you any plot details; 3. you can watch the video and / or read the text following it: very few plot details after the jump, but you are no longer a blank slate.

How much do you want to know?

It’s a post-apocalyptic world. Thousands of people are living in a mostly underground structure called the Silo. They do not know their history: as far as they know, the Silo was always there, placed by benevolent gods. Their only connection to the outside world is a set of cameras that project the dead world outside. But are the cameras really showing what is out there? There was an uprising of Silo residents in the distant past, but nobody remembers much, and even the powerful IT section that keeps all historical records does not have them. Or maybe IT does have them. The Pact, the Silo’s constitution, is incredibly restrictive: to control population growth, only a few lucky couples can procreate based on a lottery; you cannot even love without registering as a couple. To maintain law & order, you are not allowed to express any curiosity about the outside. If you do, you get what you wish for. The people that go outside die within view of the cameras, but not before they enthusiastically clean them… and why would a condemned person provide a service to the society that killed him?

Reading Wool 1, I was reminded of Plato’s Cave allegory: what is reality? Given the ability to learn more, do we even want to? In Wool 2-5, things develop, and the books explore many more themes: love, human curiosity, loyalty, and social contracts: how much is the individual responsible for the welfare of the populace? Is it ethical to keep vital knowledge from the population even if, once certain things are revealed, they are in danger of annihilating their own society? Can the social fabric survive only if its legacy is kept secret?

OK, I should really stop here. Over/out. Get the book.

This commentary appeared in Nature recently. Title: Ancient Fungi Found in Deep Sea Mud. Quote:

Researchers have found evidence of fungi thriving far below the floor of the Pacific Ocean, in nutrient-starved sediments more than 100 million years old….To follow up on earlier reports of deep-sea fungi, Reese and her colleagues studied sediments pulled up from as deep as 127 metres below the sea floor during an expedition of the Integrated Ocean Drilling Program in the South Pacific in 2010. They searched the samples for fungal genetic material and found sequences from at least eight groups. The team succeeded in growing cultures of four of the fungi.

Really cool. Fungi from 127 meters below the sea floor. We can learn a lot from studying fungi from such harsh conditions, about how multicellular eukaryotic life manages to survive, and apparently thrive, even in the most inhospitable environments. But contrary to what the title suggests, living below the ocean floor does not make those fungi any more “ancient” than the fungi living in my back yard. Both the fungi in my back yard and those found by Brandi Reese and her colleagues in the ocean evolved from a common ancestor, which presumably lived as far back as 1.4 × 10^9 years ago. None of the currently living organisms are “ancient”, because, by definition, they live today. All of today’s organisms have ancestral lines stretching into the past, and all have been subjected to natural selection. Granted, it is much harder to survive and thrive 127 meters below the bottom of the ocean than in my back yard, but that does not make the species living beneath the ocean floor ancient. It makes them adapted to a harsh environment which is oxygen, energy, and nutrient poor. This, by itself, is quite exciting and interesting.

I received this email today from Gary Battle at PDBe. Very cool:

Nobel Prize Quips: Explore the structure of B2AR bound to its G-protein.

Today, Nobel Laureates Robert J. Lefkowitz and Brian K. Kobilka take center stage in Stockholm where they will receive their Nobel Prize Medals for their studies of G-protein–coupled receptors (GPCRs).

To mark this occasion we have written a special Quips article which explores the Nobel Prize winning structure of B2AR bound to its G-protein.

http://pdbe.org/quips?story=B2AR

GPCRs are signalling proteins which enable cells to communicate with each other and the surrounding environment. They provide the molecular framework and mechanism for the transmission of a wide variety of signals over the cell membrane, between cells and over long distances in the body. In this Quips you will learn how the structure of B2AR bound to its G-protein helped explain how binding of a small molecule on one face of B2AR activates the G-protein.

If you have an interesting structure whose story you would like to tell (with our help) in the form of a Quips article, please contact us at pdbe@ebi.ac.uk

Our lab has a new project and website up. The project is BioDIG: Biological Database of Images and Genomes. BioDIG lets you combine image data and genome data of, well, just about anything of which you can make images and which has a genome, or partial genomic information.

You can upload your image, annotate (tag) parts of it, and associate the tags with one or more genes. Clicking on the tags will take you to a genome browser centered on the genomic region of interest. You can use this, for example, to take cellular images and point out specific areas of protein expression. Or cell pathologies associated with mutations. Or your favorite knockout mouse… anything that has a phenotype and an associated genotype. BioDIG allows for collaborative work, so collaborators can work together on images.

An example database, still very much in development, is here: MyDIG, the Mycoplasma Database of Images and Genomes. Go here for an example of a tagged image that leads you to the genome browser. Or browse through the screenshots:

For the genome browser we used GMOD (Generic Model Organism Database) technologies. The genome browser is GBrowse; the genome database is Chado + a few hacks of our own (like an SQLite layer that gets things done faster). This is a good opportunity to thank Scott Cain and all the people of the GMOD community who have been helping us.

BioDIG is very much a work in progress, and when I say “we” I mean my graduate student, Andy Oberlin, who developed and coded, and my programmer, Rajeswari Swaminathan: I’m just trying to keep up with them. Right now we have a paper in review, and a preprint on arXiv.org.

BioDIG is now mature enough to start getting more programmers and users involved. So if you are interested in linking images to genomes, or, more generally, genotypes to phenotypes, please visit biodig.org. The code is up on our github repository, under an open source license. Feel free to fork, and get busy helping. We have a whole list of todos and a development plan. We also love to hear new ideas. Contact Andy, Raji, or myself if interested in contributing, or in setting up your own BioDIG-enabled website.

Dave Brubeck has moved on to a new time signature. Let us Take Five… and listen to Blue Rondo à la Turk.

I didn’t have a clever title. “PeerJ: a new kid on the block” was already taken by Bora Zivkovic. “PeerJ: Publish there or suffer” is aggressively counterproductive. “PeerJ is awesome”. Meh.

So: PeerJ. A new open access scientific journal.

PeerJ is the brainchild of Peter Binfield, who was the managing editor of PLoS ONE, and Jason Hoyt, who was the Chief Scientist and VP of R&D of Mendeley. I hope that this will be a working solution to the main problem that I, and many others, have identified with Open Access publishing: the financial burden to the researcher. With typical costs of $2000-$4000 per paper, the OA model still turns many people away: why should I publish OA when I can get a couple of laptops instead? Even if I do have the money (which many don’t).

Things will change Monday, December 3, when PeerJ will start accepting publications. And I believe that they will change for the better:

Journal subscription fees made sense in a pre-Internet world, but now they just slow the progress of science. It’s time to change that. PeerJ has established a new model for open access publishing: instead of charging you each time you publish, we ask for a single one off payment, giving you the lifetime right to publish articles with us, and to make those articles freely available. Lifetime plans start at just $99

That’s right. You can publish one paper per year in PeerJ for a one-time fee of $99! You can also go higher, with two publications/year at $199 (again, that’s a one-time fee) and an unlimited plan for $299. That’s a pretty sweet deal. Note that every author on the paper must have a membership plan in order to publish. Also, you can pay after acceptance, but that carries a premium. So if you are on the 1 paper/year plan, but you only pay after your paper is accepted, you pay $30 more.

I like this proposed system, for several reasons. First, it’s cheaper: although the authors of a typical paper (2 labs, 4 people each) will still pay the same amount for their first paper as for a regular OA paper, everyone benefits for a lifetime.
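For the curious, here is a back-of-the-envelope sketch of that first-paper cost, using only the prices quoted in this post. It assumes every one of the 8 authors buys the $99 plan, and that the $30 pay-after-acceptance premium applies per author; the function and constant names are mine, for illustration only.

```python
# Rough first-paper cost under PeerJ's membership model, using the
# prices quoted in this post. The per-author premium is an assumption.
BASIC_PLAN_USD = 99         # one paper per year, one-time fee
PAY_LATER_PREMIUM_USD = 30  # extra when paying only after acceptance


def first_paper_cost(n_authors: int, pay_after_acceptance: bool = False) -> int:
    """Total membership fees for a group publishing its first paper."""
    per_author = BASIC_PLAN_USD
    if pay_after_acceptance:
        per_author += PAY_LATER_PREMIUM_USD
    return n_authors * per_author


if __name__ == "__main__":
    authors = 2 * 4  # 2 labs, 4 people each
    print(first_paper_cost(authors))        # 792
    print(first_paper_cost(authors, True))  # 1032
```

On these assumptions the group pays $792 up front, or $1032 if everyone waits until acceptance, to be set against the $2000-$4000 quoted above for a typical OA paper.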

Also, PeerJ indirectly monetizes peer-review: you need to review papers to maintain your plan. Everybody is talking about how peer-review is laborious and not being rewarded, but PeerJ actually gives reviewing a tangible value.

How can they do it for such a low price? Well, from the FAQ:

Don’t forget that papers typically have more than one author, authors tend to publish in more than one venue over time, and some will publish fewer papers than others. In addition, our cost structure is lower than more traditional publishing companies (which might have legacy systems to deal with, or be aiming to make an excessive profit). When you combine those facts, the finances do work out.

Just how much of a change will be effected by PeerJ, only time will tell. And it takes quite a bit of time to change publication culture and sensibilities. PLoS ONE received wide recognition of its value when its first impact factor was a surprising 4.01, higher than was expected for a journal that eschews perceived importance, and judges submissions only by their scientific rigor. As a colleague told me once: “when I want something out there quickly, without sacrificing my good name, that’s where I submit”. PeerJ will enable you to do that, and not sacrifice your research budget.

What about quality? Well, I looked into the editor list in Bioinformatics, and there are some leading names there including Charlotte Deane, Mikhail Gelfand, Kenta Nakai, Alfonso Valencia, Christophe Dessimoz, Folker Meyer and others. I don’t imagine quality being compromised, which is unfortunately a growing concern in the Open Access field.

Another good thing: the PeerJ enterprise is backed by O’Reilly Media, and Tim O’Reilly is on the board. O’Reilly (the man and the publication group) has supported openness of information, especially software, for a very long time. So it’s nice to see this particular publisher backing PeerJ.

Some time ago I wrote that Open Access will become popular when it is convenient to use:

…it is convenience, rather than ideology, that will determine the adoption of Open Access. How much does it cost? Is it in a “good” journal?

I think that with PeerJ, this “revolution of convenience” has just happened.

See what happens when a Capuchin monkey receives unequal pay:

The article in Nature (2003): Monkeys reject unequal pay, by Sarah F. Brosnan & Frans B. M. de Waal

Today is the last day of Open Access Week, where all things Open Access are heralded. William Gunn gave a great talk here at MU on how open access is changing scholarship. (And a big thank you to our librarians Jen Waller & Kevin Messner for hosting William!) I have posted about Open Access before, mainly as a dissident voice about some aspects of the process. To make things clear: I fully support the idea, and the reason I am being somewhat critical is that now that Open Access is established as a successful and leading publication model, it is time to move on and have OA practitioners fulfill its promise of being a sensible and fair one.

But what is Open Access, exactly? I never explained it since I assume that if you are reading this blog you either (1) know what it is or (2) have the wherewithal to find out for yourself. But after Gunn’s talk here on campus, I realized there are quite a few people who still misunderstand OA. Common misconceptions were that open access publications are free, or not peer-reviewed (so inherently of lesser quality than traditional publications), and that OA publishing is not economically sustainable for the publications of scientific societies.

So here is an entertaining and informative video, from Piled Higher and Deeper web comics, explaining Open Access. Narrated by Nick Shockey of the Right to Research Coalition and Jonathan Eisen, professor at the University of California, Davis and editor in chief of PLoS Biology. Bonus: Jonathan Eisen as an Open Access Che Guevara at 4:54. Enjoy. Thanks to Mickey Kosloff for bringing the video to my attention.

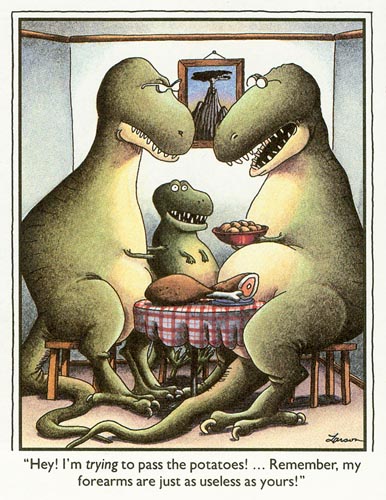

Let’s get this clear: Tyrannosaurus rex, the best-selling figurine of class Reptilia, is not my favorite bad-ass top-of-the-food-chain predator. Come on. Did you see its arms? I mean…

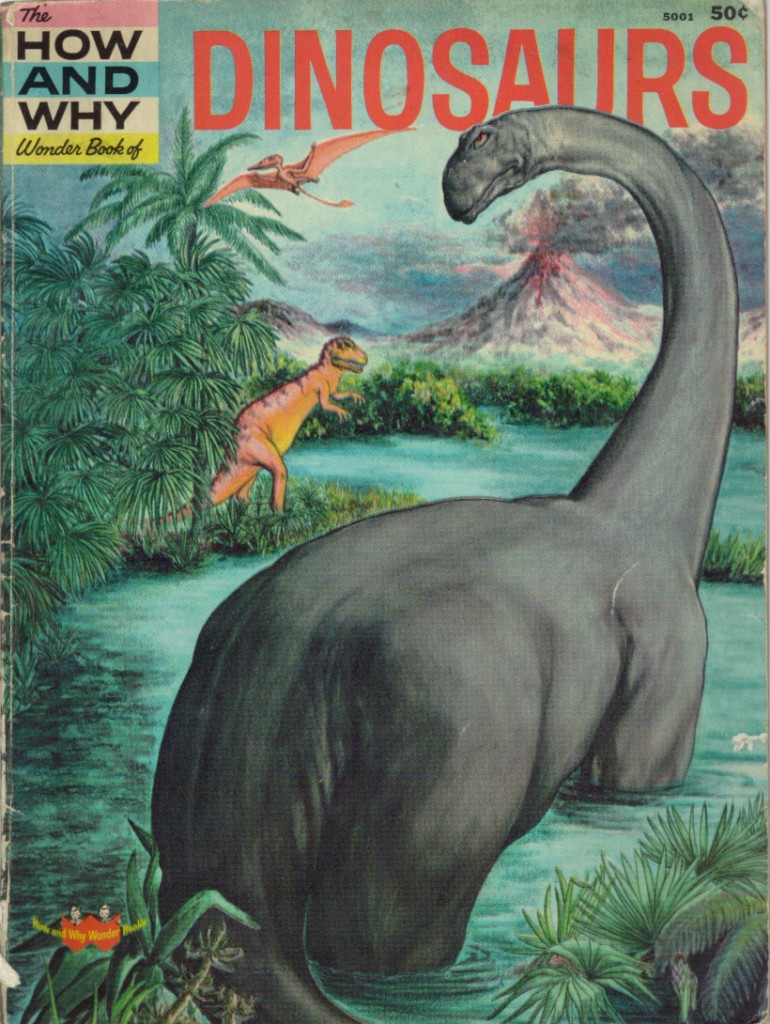

As a kid, I always thought the Allosaurus was much cooler. For one thing, it was on the cover of my favorite dinosaur book, “The How and Why Wonder Book of Dinosaurs”. Along with a Rhamphorhynchus (not a Pteranodon; thanks for the correction, Charlie), making it one of the three most important dinosaurs, ever! Admittedly, the third was a Brontosaurus, a dino shown to be not extinct, but never to have actually existed.

Continue reading DNA half life, and my dream of an Allosaurus Army →

My previous post on ROSALIND, a bioinformatics learning site, got picked up by the Slashdot community. A discussion came up on careers in Bioinformatics, and the Slashdot user rockmulle made some interesting observations on career paths in bioinformatics, which I have copied here. While brief and therefore omitting many important details (research at a university or at a research institute may be dramatically different), I think it captures the spectrum of employment in the field quite well, and identifies flaws that exist in some training programs. Thanks rockmulle / Chris, whoever you are.

I run a bioinformatics software company, have been in the field for over a decade, and have worked in scientific computing even longer.

I’ll start with a quick answer to the bubble question: there are already too many ‘bioinformatics’ grads but there are not enough bioinformatics professionals (and probably never will be). There are many bioinformatics Masters programs out there that spend two years exposing students to bioinformatics toolsets and give them cursory introductions to biology, computer science, and statistics. These students graduate with trade skills that have a short shelf life and lack the proper foundations to gain new skills. In that respect, there’s a bubble, unfortunately.

If you’re serious about getting into bioinformatics, there are a few good routes to take, all of which will provide you with a solid foundation to have a productive career.

The first thing to decide is what type of career you want. Three common career paths are Researcher, Analyst, and Engineer. The foundational fields for all are Biology, Computer Science (all inclusive through software engineering), and Statistics. Which career path you follow determines the mix…

Researchers have Ph.D.s and tend to pursue academic or government lab careers. Many research paths do lead to industry jobs, but these tend to morph into the analyst or engineer roles (much to the dismay of the researcher, usually). Bioinformatics researchers tend to have Ph.D.s in Biology, Computer Science, Physics, Math, or Statistics. Pursuing a Ph.D. in any of these areas and focusing your research on biologically relevant problems is a good starting point for a research career. However, there are currently more Ph.D.s produced than research jobs available, so after years in school, many bioinformatics-oriented Ph.D.s tend to end up in Analysis or Engineering jobs. Your day job here is mostly grant writing and running a research lab.

Bioinformatics Analysts (not really a standard term, but a useful distinction) focus on analyzing data for scientists or performing their own analyses. A strong background in statistics is essential (and, unfortunately, often missing) for this role along with a good understanding of biology. Lab skills are not essential here, though familiarity with experimental protocols is. A good way to train for this career path is to get an undergraduate degree in Math, Stats, or Physics. This provides the math background required to excel as an analyst along with exposure to ‘hard science’. Along the way, look for courses and research opportunities that involve bioinformatics or even double major in Biology. Basic software skills are also needed, as most of the tools are Linux-based command line applications. Your day job here is working on teams to answer key questions from experiments.

Bioinformatics engineers/developers (again, not really a standard term, but bear with me) write the software tools used by analysts and researchers and may perform research themselves. A deep understanding of algorithms and data structures, software engineering, and high performance computing is required to really excel in this field, though good programming skills and a desire to learn the science are enough to get started. The best education for this path is a Computer Science degree with a focus on bioinformatics and scientific computing (many problems that are starting to emerge in bioinformatics have good solutions from other scientific disciplines). Again, aligning additional coursework and undergraduate research with biologists is key to building a foundation. A double major in Biology would be useful, too. To fully round this out, a Masters in Statistics would make a great candidate, as long as their side projects were all biology related. Your day job here is building the tools and infrastructure to make bioinformatics function.

All three career paths can be rewarding and appeal to different mindsets.

If you haven’t followed the NPR series on gene sequencing over the last few weeks, it’s definitely worth listening to. I also did a talk a few years back at TEDxAustin on the topic that makes the connection between big data and sequencing (http://bit.ly/mueller-tedxaustin). Affordable sequencing is changing biology dramatically. Going forward, it will be hard to practice some parts of biology without sequencing, and sequencing needs informatics to function.

Good luck!

-Chris

I just learned about this one: ROSALIND is a really cool concept in learning bioinformatics. You are given problems of increasing difficulty to solve. Start with nucleotide counting (trivial) and end with genome assembly (not so trivial). To solve a problem, you download a sample data set, write your code and debug it. Once you think you are ready, you have a time limit to solve and provide an answer for the actual problem dataset. If you mess up, there is a timed new dataset to download. This thing is coder-addictive. Currently in Beta, but a lot of fun and seems stable.
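To give a flavor of the entry-level end, here is a minimal sketch of what a nucleotide-counting solution could look like in Python. The exact input and output format ROSALIND expects is not described here, so the function name, the A/C/G/T output order, and the sample string are my own assumptions for illustration.

```python
from collections import Counter


def count_nucleotides(dna: str) -> tuple:
    """Return the counts of A, C, G and T in a DNA string, in that order."""
    counts = Counter(dna.upper())
    # Counter returns 0 for symbols that never appear, so no special-casing.
    return counts["A"], counts["C"], counts["G"], counts["T"]


if __name__ == "__main__":
    # Made-up sample string, not an actual ROSALIND dataset.
    sample = "AGCTTTTCATTCTGACTGCA"
    print(*count_nucleotides(sample))  # 4 5 3 8
```

With the timed datasets, the sensible workflow is to get something like this working on the sample first, and only then download the real thing.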

More problems to come, and they also have post problem-solving discussion boards (no, you cannot take a peek at the answers), and a discussion board for new problems. From their “About” page:

The project’s name commemorates Rosalind Franklin, whose X-ray crystallography with Raymond Gosling facilitated the discovery of the DNA double helix by Watson and Crick. […]

Rosalind is a joint project between the University of California at San Diego and Saint Petersburg Academic University along with the Russian Academy of Sciences. Rosalind is partly funded by a Howard Hughes Medical Institute Professor Award and a Russian Megagrant Award received by Pavel Pevzner.

Did I say addictive? Yes. Make sure you clear some time for this one.