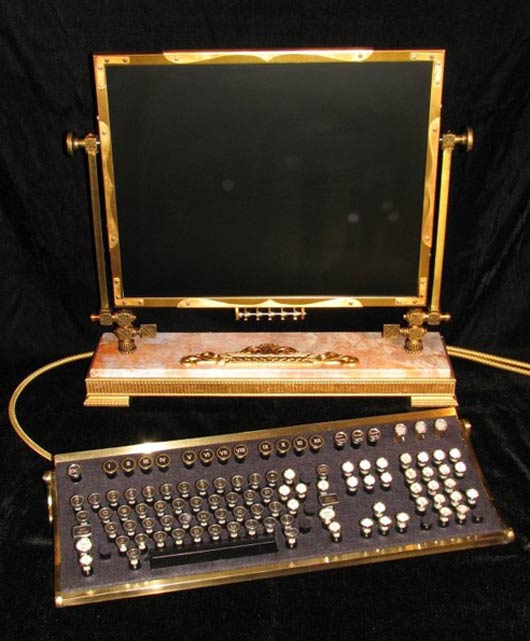

ISMB 2012 was an excellent meeting. The organizers were celebrating the 20th anniversary of the ISMB meetings and had carefully chosen keynote speakers who would not only present the latest advances in bioinformatics, but also talk about past accomplishments, how they led us to where we are now, and what the future may hold. Larry Hunter’s retrospective talk was very good in that respect: his slides, which went from punch cards through the Apple II to the present day, together with his hilarious narrative, were reason enough to hear him speak.

Over the next few days, I will post bits and pieces from my experience at ISMB. To start off, here are Russ Altman’s (@rbaltman) slides from the Grant Writing Workshop organized by Yana Bromberg. Russ is a great science communicator, and here he was at his best: teaching scientists how to communicate their research ideas effectively to other scientists, the goal being to get money for said ideas. His slides are simple and self-contained, so after the jump I will just jot down a few points Russ made at the meeting.

1. Follow the rules: this is not the time to get creative with fonts, margins, etc. Your grant will get trashed by an automated compliance check if you do not follow the exact rules. Also, talk to the program officer. Get on their radar, and make sure you are sending the grant to the correct program.

2. The reviewer is in a bad mood: they took on a large pile of grants to review, put it off too long, and are now mad at themselves, the agency, and their travel schedule. They may have read a few grants before yours, and if those were not very good, they are mourning the future of science by now. They are looking for a reason to stop reading your grant and move on to the next one in the pile. Therefore, 1) do not give them a reason to trash your grant; 2) try to brighten their day and restore their faith in scientists.

3. Make it beautiful: imagine you are a reviewer. You have just worked your way through a page that is one solid block of text, and nothing is more disheartening than turning the page and getting more of the same. As a proposal writer, make sure you break the monotony. Use bullet points, headings, etc. to break up the text and draw the reviewer’s eye to the important parts (bold is good for the latter, but don’t overdo it). And, of course, an image is worth 1000 words. Literally, sometimes.

4. Good ideas are hard to come by. Russ: “I can’t help you with that one, but see bullet point 2”.

5. Abstract structure is critical: address all the points made in the slides. Why is this research area important, what problem remains unsolved, and how are you going to solve it?

6. Specific Aims: allow yourself maximum flexibility after the award. List what you are going to do, as the “how” is likely to change.

7. Literature review: 1) show that you are smart enough to be in the field; 2) cite your potential reviewers, or the ones you know will be on the panel. The NIH provides a roster of the members of your study section.

8. Prior results: you may not want to present the proposed work as “done”, so you may want to place some prior results in the Methods section, as appropriate. Don’t lie, though: if a Specific Aim has already been completed, you should design another one.

9. Methods: pretty much a self-contained slide. My own take: work hard to make the methodology accessible to an audience outside your field. It is easy to get caught up in jargon and verbal shortcuts known to you and to the 10 other people worldwide performing these methods. Don’t yield to the temptation to make your grant shorter by obfuscating the description of the methods.

10. Other things: fairly self-explanatory. I might add that naming a specific person (postdoc or grad student) on a grant rather than listing “TBA” seemed to help, judging by the reviewer comments on one of my grants that was funded.

Thanks, Russ!