Four years ago I wrote about how Open Access would be adopted if it were convenient. Polls at the time showed that few scientists actively seek to publish OA, even though many support it. Reasons given, in no particular order: aiming for journals that were not OA, and high publication fees. My conclusion was that researchers will try to publish OA not out of any OA ideology, but out of convenience. I should also have added palpable gain (e.g. in the prestige of the publication venue).

So for Open Access Week, I decided to revisit that post, “The Revolution Will be Convenient”. Has anything changed since?

No and yes. In a 2014 survey of Canadian researchers, immediate Open Access received only 3.3 out of a possible 100 importance points. OA publishing after an embargo period received 2.2 points. The top considerations for choosing a publication venue were impact factor (26.8 points) and journal reputation (42.9 points). So in that sense (and assuming the Canadian survey reflects the attitudes of scientists in other countries), little has changed. While there may be a positive attitude towards publishing immediate OA, there is little incentive to do so. Scientists want to make a splash every time they publish, but most do not seem to equate Open Access with “making a splash”. Researchers are still more concerned with promotion and grant review committees reading where they published than with growing the audience that reads what they published.

“The survey suggests however, that there is a disconnect between researchers’ apparent agreement with the principle of open access (i.e., that research should be freely available to everyone) and their publishing decision criteria. Although the vast majority of researchers (83%) agree with the principle of open access, the availability of open access as a publishing option was not an important decision criterion when selecting a journal in which to publish. In this regard, availability of open access ranked 6th out of 18 possibility criteria. It was eight times less important than impact factor and thirteen times less important than journal reputation when selecting a journal” (Source)

But it seems that things are changing, although those changes are coming from the establishment rather than from the people. So it is not exactly a revolution: the people still overwhelmingly favor prestige over access. Increasingly, funding agencies and universities are mandating OA publication, although not immediate, author-pays “gold” open access. The mandates are mostly for self-archiving, or “green” open access. The NIH has been mandating that all NIH-funded research be made publicly available within 12 months of publication. The White House Office of Science and Technology Policy has directed other US federal agencies to develop policies for expanding access to federally funded research. Open access mandates by funding agencies are not a done deal yet, but the wind seems to be blowing in that direction. Many journals now allow self-archiving of preprints and pre-publication copies. Green open access is convenient, free of publication charges, and mostly does not interfere with the main consideration that researchers have when choosing a publication venue: the “high profile” journal.

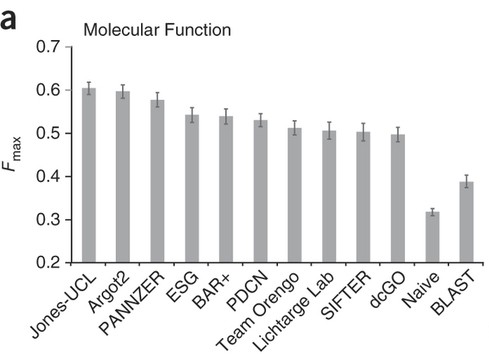

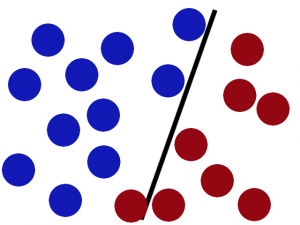

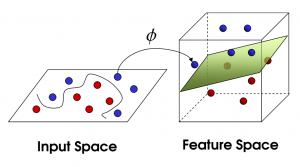

Growth of funder and institutional open-access mandates, Source: http://roarmap.eprints.org/

So is there a problem? Yes, several. First, the embargo period. Green OA allows for an embargo period, which means that the final paper is not immediately freely available. Anyone wishing to read the latest and hopefully greatest achievements in science would still be stymied by a paywall. Of course, this goes back to the whole “do I care who reads me?” question: researchers seem to mostly care about other researchers reading what (and where) they published. Those colleagues would mostly have access to the manuscript anyway. Second, self-archiving and preprint policies vary, and even if a self-archived copy is available, it may take some effort to locate it, although Google Scholar seems to be doing a pretty good job in that department. Finally, publishers’ policies regarding preprints vary and are sometimes unclear, which can deter researchers from self-archiving lest they violate some policy. So green is not without its shortcomings, even without an embargo period.

In the UK, a 2012 report by The Working Group on Expanding Access to Published Research Findings supported Gold OA. It discounted the Green methods for many of the reasons stated above, and recommended the author-pays, immediate publishing model.

“If green is cumbersome, messy, involves assumptions about cooperation and investment in infrastructure, and still only delivers an imperfect version of the article, and then several months after publication, surely it’s better to pay for the final version to be accessible upon publication?” (Source)

On the plus side, no one is really arguing anymore about whether we should publish open access at all. OA is here to stay, and the questions asked today relate to degrees of accessibility, freedom to reproduce, and financial models to support OA. But the Canadian survey has brought one thing to the forefront: something is wrong in a scientific culture that has turned communication into coinage, and the disconnect between the values researchers profess (overwhelmingly pro open access) and what they actually practice (OA counts for little when choosing a publication venue) is worrying.

“So great are the rewards for publishing in top academic journals that everyone games furiously – authors, editors, universities and academic publishers…” (Source)