Support Vector Machines explained well

Found this on Reddit r/machinelearning

(In related news, there’s a machine learning subreddit. Wow.)

Support Vector Machines (warning: the Wikipedia article in the previous link is dense!) are learning models used for classification: deciding which group each individual in a population belongs to. So… how do SVMs and the mysterious “kernel” work?

The user curious_thoughts asked for an explanation of SVMs as if s/he were a five-year-old. User copperking stepped up to the plate:

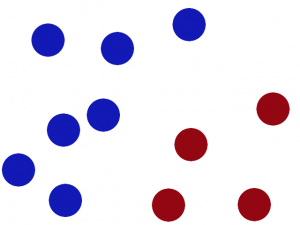

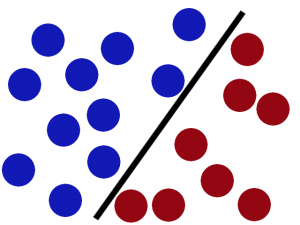

We have 2 colors of balls on the table that we want to separate.

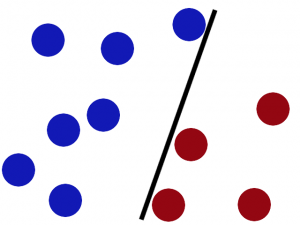

We get a stick and put it on the table. This works pretty well, right?

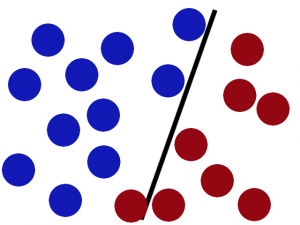

Some villain comes and places more balls on the table. The stick kind of works, but one of the balls is on the wrong side, and there is probably a better place to put the stick now.

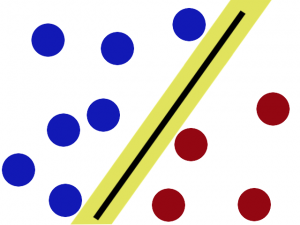

SVMs try to put the stick in the best possible place by having as big a gap on either side of the stick as possible.

Now when the villain returns the stick is still in a pretty good spot.
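For readers who want to see the "biggest gap" idea in code, here is a minimal sketch in plain Python. It is not how a real SVM solver works: it only scores three hand-picked candidate sticks and keeps the one with the widest gap. The ball positions, the candidate sticks, and the helper name `margin` are all made up for illustration.

```python
import math

def margin(w, b, points, labels):
    """Smallest signed distance from any ball to the stick w.x + b = 0.

    The value is negative if at least one ball sits on the wrong side.
    """
    norm = math.hypot(w[0], w[1])
    return min(
        y * (w[0] * x[0] + w[1] * x[1] + b) / norm
        for x, y in zip(points, labels)
    )

# Hypothetical toy table: red balls (-1) on the left, blue balls (+1) on the right.
points = [(1.0, 1.0), (2.0, 3.0), (6.0, 2.0), (7.0, 4.0)]
labels = [-1, -1, +1, +1]

# Three candidate vertical sticks, written as w = (1, 0) and b = -position.
candidates = {
    "x = 2.5": ((1.0, 0.0), -2.5),
    "x = 4.0": ((1.0, 0.0), -4.0),
    "x = 5.5": ((1.0, 0.0), -5.5),
}

# Score every stick by its gap and keep the widest one.
margins = {name: margin(w, b, points, labels) for name, (w, b) in candidates.items()}
best = max(margins, key=margins.get)
print(best, margins[best])
```

All three sticks separate the colors, but the middle one leaves the most room on both sides, so a new ball dropped near either group is more likely to land on the correct side. A real SVM searches over every possible stick rather than a short list.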

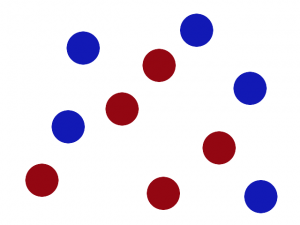

There is another trick in the SVM toolbox that is even more important. Say the villain has seen how good you are with a stick so he gives you a new challenge.

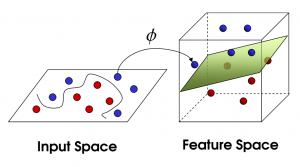

There’s no stick in the world that will let you split those balls well, so what do you do? You flip the table of course! Throwing the balls into the air. Then, with your pro ninja skills, you grab a sheet of paper and slip it between the balls.

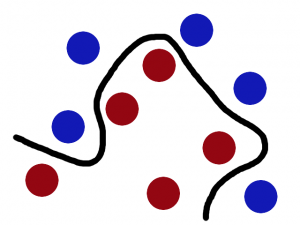

Now, looking at the balls from where the villain is standing, the balls will look split by some curvy line.
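The table flip can be sketched in a few lines of Python. This assumes one particular layout, red balls clustered in the middle with blue balls in a ring around them, and one particular way of throwing them in the air: each ball's height is its squared distance from the center of the table. The coordinates, the `lift` helper, and the sheet height are hypothetical choices for illustration.

```python
def lift(p):
    """Throw a ball into the air: its height is its squared distance from the center."""
    x, y = p
    return (x, y, x * x + y * y)

# Hypothetical layout that no straight stick can split on the flat table.
inner = [(0.5, 0.0), (0.0, -0.5), (-0.3, 0.4)]   # red balls near the center
outer = [(2.0, 0.0), (0.0, 2.5), (-1.5, -1.5)]   # blue balls in a ring

# The sheet of paper: a flat plane at an assumed height of 1.0.
sheet_height = 1.0

def side(p):
    """Return +1 if the lifted ball is above the sheet, -1 if it is below."""
    return 1 if lift(p)[2] > sheet_height else -1

inner_sides = [side(p) for p in inner]
outer_sides = [side(p) for p in outer]
print(inner_sides, outer_sides)
```

In the air, a flat sheet is enough to split the colors. Seen from above, that sheet corresponds to the circle where the squared distance equals 1, which is the curvy line.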

Boring adults call the balls data, the stick a classifier, the biggest gap trick optimization, flipping the table kernelling, and the piece of paper a hyperplane.

That was copperking’s explanation.
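One detail the five-year-old version skips: in practice you never have to actually throw the balls in the air. A kernel function gives the same dot product you would get after lifting, computed directly from the original coordinates. Here is a rough sketch using a degree-2 polynomial kernel as an example; the function names and the two sample points are invented for illustration.

```python
import math

def phi(p):
    """Explicit lift of a 2-D point into 3-D for the degree-2 polynomial kernel."""
    x, y = p
    return (x * x, math.sqrt(2) * x * y, y * y)

def dot(a, b):
    """Plain dot product of two equal-length tuples."""
    return sum(ai * bi for ai, bi in zip(a, b))

def poly_kernel(p, q):
    """Degree-2 polynomial kernel: the squared dot product in the original space."""
    return dot(p, q) ** 2

# Two hypothetical balls on the table.
p, q = (1.0, 2.0), (3.0, 4.0)

lifted = dot(phi(p), phi(q))   # lift first, then take the dot product
shortcut = poly_kernel(p, q)   # stay on the table, apply the kernel
print(lifted, shortcut)
```

Both routes give the same number (up to floating-point rounding), which is why an SVM can work with very high-dimensional lifts without ever building them.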

Related: Udi Aharoni created a video visualizing a polynomial kernel:

And, more recently, William Noble published a paper in Nature Biotechnology. You can access an expanded version here. Thanks to Mark Gerstein for tweeting this paper.

Happy kernelling!
